AI for Quality Engineering

How to use AI to break the shackles that have held testing teams back for decades.

The Path #9

Written by Nate Buchanan, COO & Co-Founder, Pathfindr

Last week’s edition kicked off our “AI in [Insert Industry Here]” series with a deep-dive on how AI can make a difference in private equity. This week, we’ll continue the series by focusing on a capability instead of an industry.

Today we’re talking about how AI can radically transform quality engineering (QE) - what used to be called “testing” or “quality assurance” - and eradicate some stubborn problems that have been plaguing these teams for years. Before we begin, though, it’s worth giving a bit of background on why we chose this topic. I promise this won’t be like one of those recipes you read online where you scroll past the person’s life story before you start seeing ingredients.

Before co-founding Pathfindr, I spent nearly half of my consulting career leading global QE teams. I became intimately familiar with the trials and tribulations of checking code for defects. It’s a thankless job, similar to being a goalkeeper on a soccer team - people only pay attention to you when you fail and let a ball (or bug) through. Otherwise, they take you for granted.

What’s more, the evolution of software delivery methodologies over the past 10-15 years has minimized the QE role. Recently, there’s been a shift towards developers owning more quality responsibilities, to the point that a full-time tester or QE - as opposed to a full-stack developer who is also expected to test their code - is engaged only right before code is supposed to go into production (if at all).

This isn’t a good thing. Many developers don’t like testing, and there is a sense in the industry that software is getting worse.

I believe that this lack of emphasis on testing stems - at least in part - from how tiresome it is. Consider the following challenges that a testing team often experiences:

Poorly written requirements - requirements should be “testable” (i.e., written so that running a test can prove whether or not they have been met), but many testers are asked to verify unclear requirements. Sometimes, they are asked to test that an application works without having any requirements at all.

Lack of technical documentation - to properly check that an application is working, requirements are necessary, but not sufficient. Technical documentation - such as a system architecture diagram - is also helpful to QEs who want to understand how the system “hangs together” so that they can anticipate failure modes and the ways performance issues might arise. Unfortunately, this kind of documentation is often incomplete or unavailable.

Data and environment issues - these are the bane of every tester’s existence. I have yet to meet a QE leader whose test data and environments are completely under control. If a software test were a car, the data would be the fuel and the environment would be the road. It’s not going anywhere without both of those things.

Cumbersome defect management processes - defects are a necessary part of the testing process. If you’re finding them, it means it’s working! But when it comes to managing defects - creating them, tagging them, triaging them, getting them fixed, and retesting them - teams are often beset with a lack of standardization in defect content and a haphazard approach to getting them closed out.

Lack of traceability for production incidents - one of the most important parts of a tester’s job is learning how to improve their approach when a defect ends up in production. The challenge is that often the process for managing production incidents is not connected to the defect management process or to features and requirements further up the chain. This makes it difficult for testers to trace the source of a problem or identify which test scripts need to be updated.

In the aggregate, these issues make testing a difficult job. Fortunately, there are many ways that AI can help, starting at the beginning of the “Quality Funnel”.

Requirement Validation

AI can check that the requirements written by business analysts (BAs) are testable: a model trained to recognize “good” requirements can review the ones written for a particular project and recommend improvements. In some cases, you could also use an LLM to reverse engineer requirements from the code itself, though this isn’t recommended as a software development best practice.
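As a rough illustration of automated requirement review, the sketch below swaps the trained model for a simple rule-based check: it flags vague terms, a missing binding verb (shall/must/will), and timing claims with no number attached. The term list, the rules, and the names `check_testability` and `TestabilityReport` are hypothetical assumptions, not a standard; a trained model or an LLM would catch far subtler problems.

```python
import re
from dataclasses import dataclass, field

# Hypothetical list of ambiguous terms; tune it to your own domain.
VAGUE_TERMS = {
    "fast", "quickly", "user-friendly", "easy", "intuitive", "robust",
    "flexible", "appropriate", "efficient", "etc", "as needed", "and/or",
}
# Words that usually signal a timing/performance requirement.
TIMING_WORDS = r"\b(fast|quick|quickly|slow|within|under|less than)\b"


@dataclass
class TestabilityReport:
    requirement: str
    issues: list[str] = field(default_factory=list)

    @property
    def is_testable(self) -> bool:
        # "Testable" here only means "passed these heuristic checks".
        return not self.issues


def check_testability(requirement: str) -> TestabilityReport:
    report = TestabilityReport(requirement)
    text = requirement.lower()
    for term in sorted(VAGUE_TERMS):
        # Match whole words/phrases only, so "fast" does not fire inside "breakfast".
        if re.search(rf"(?<!\w){re.escape(term)}(?!\w)", text):
            report.issues.append(f"vague term: '{term}'")
    if not re.search(r"\b(shall|must|will)\b", text):
        report.issues.append("no binding verb (shall/must/will)")
    if re.search(TIMING_WORDS, text) and not re.search(r"\d", text):
        report.issues.append("performance claim without a measurable number")
    return report
```

For example, “The search page must load fast.” is flagged twice (a vague term and an unmeasurable timing claim), while “The search page must return results within 2 seconds for 95% of queries.” passes. Passing these checks doesn’t prove a requirement is good, only that it avoided these particular smells.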

Architecture Review

You can use AI for a wide range of tasks related to a system’s architecture, including:

Creating an architecture diagram from requirements or code (useful for mapping dependencies between different systems or projects)

Helping a non-technical team member understand how a system works in plain English (or any other language for that matter)

Using architecture to predict potential failure points, test data requirements, or even generate test cases
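To make “predicting failure points” concrete, here is a minimal sketch that assumes the architecture has already been captured (by a person or an AI tool) as a map of which component calls which. It ranks each component by its “blast radius”, i.e., how many other components depend on it directly or transitively. The component names and the `ARCHITECTURE` map are hypothetical, and blast radius is just one heuristic for prioritizing resilience tests.

```python
from collections import defaultdict, deque

# Hypothetical architecture: each component maps to the components it calls.
ARCHITECTURE = {
    "web-frontend": ["api-gateway"],
    "mobile-app": ["api-gateway"],
    "api-gateway": ["auth-service", "order-service"],
    "order-service": ["payments-service", "orders-db"],
    "payments-service": ["payments-provider"],
    "auth-service": ["users-db"],
    "orders-db": [],
    "users-db": [],
    "payments-provider": [],
}


def reverse_edges(graph):
    """Map each component to the set of components that call it directly."""
    callers = defaultdict(set)
    for component, deps in graph.items():
        for dep in deps:
            callers[dep].add(component)
    return callers


def blast_radius(graph, component):
    """All components that depend on `component`, directly or transitively (BFS)."""
    callers = reverse_edges(graph)
    seen, queue = set(), deque([component])
    while queue:
        current = queue.popleft()
        for caller in callers.get(current, ()):
            if caller not in seen:
                seen.add(caller)
                queue.append(caller)
    return seen


def rank_failure_points(graph):
    """Rank components by how many others are affected if they fail."""
    scores = {c: len(blast_radius(graph, c)) for c in graph}
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))
```

In this made-up system, the external `payments-provider` comes out on top because every user-facing path to checkout runs through it, which makes it a natural first candidate for failover and timeout tests.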

Test Planning

Thinking of all the different ways a system could fail and creating test cases for each of them can be a very time-consuming exercise. It also requires critical thinking and creativity, skills that are sometimes difficult to find when hiring technical testers. AI can help bridge the gap by generating many different test case permutations, which a human can then review to add another layer of critical thinking and ensure that all the necessary scenarios are covered.
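The permutation step itself can be sketched without any AI at all, which helps show what a tool is doing under the hood. The example below assumes a hypothetical checkout flow with four parameters, generates the full Cartesian product (3 × 3 × 2 × 2 = 36 cases), then applies a greedy all-pairs (pairwise) reduction, a well-known technique that keeps every pair of parameter values covered with far fewer tests. The parameters, values, and function names are illustrative assumptions; a human reviewer would still prune and extend the result.

```python
from itertools import combinations, product

# Hypothetical parameters for a checkout flow; values are illustrative only.
PARAMETERS = {
    "browser": ["chrome", "firefox", "safari"],
    "payment": ["card", "paypal", "gift_card"],
    "user": ["guest", "registered"],
    "shipping": ["standard", "express"],
}


def all_permutations(params):
    """Every combination of parameter values (the full Cartesian product)."""
    names = list(params)
    return [dict(zip(names, values)) for values in product(*params.values())]


def pairs_in(case):
    """All (parameter, value) pairs that a single test case exercises together."""
    items = sorted(case.items())
    return set(combinations(items, 2))


def pairwise_suite(params):
    """Greedy all-pairs reduction: repeatedly keep the candidate that covers the
    most still-uncovered value pairs until every pair appears in some test."""
    candidates = all_permutations(params)
    uncovered = set().union(*(pairs_in(c) for c in candidates))
    suite = []
    while uncovered:
        best = max(candidates, key=lambda c: len(pairs_in(c) & uncovered))
        suite.append(best)
        uncovered -= pairs_in(best)
    return suite
```

Greedy selection doesn’t guarantee the smallest possible suite, but it is simple and deterministic; the 36 exhaustive cases remain available if a reviewer decides a risky area deserves full coverage.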

Test Preparation

There’s a massive opportunity in using generative AI to create test data. The era of taking a snapshot of production data, masking it - or (gasp!) not - and using it in lower environments should be a thing of the past. Many of the test data management tool providers are working to introduce AI into their applications, but it’s also possible to use Python-based randomizers to build a DIY solution if you have the right skills in-house.
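A DIY randomizer can be as small as the sketch below, which uses only Python’s standard `random` module with a fixed seed so every test run gets the same data. The schema, value pools, and `synthetic_customers` name are hypothetical assumptions; nothing is derived from production data, and a real solution would also need to model your actual business rules and edge cases.

```python
import random
import string
from datetime import date, timedelta

# Hypothetical value pools; nothing here is copied from production data.
FIRST_NAMES = ["Ava", "Liam", "Noah", "Mia", "Zoe", "Ethan"]
LAST_NAMES = ["Smith", "Nguyen", "Garcia", "Patel", "Kowalski", "Okafor"]
PLANS = ["free", "standard", "premium"]


def synthetic_customers(count, seed=42):
    """Generate reproducible, fully synthetic customer records."""
    rng = random.Random(seed)  # seeded so the same seed always yields the same data
    start = date(2020, 1, 1)
    customers = []
    for i in range(count):
        first, last = rng.choice(FIRST_NAMES), rng.choice(LAST_NAMES)
        customers.append({
            "customer_id": f"CUST-{i + 1:06d}",
            "name": f"{first} {last}",
            # example.com is reserved for documentation, so no real mailbox is used
            "email": f"{first.lower()}.{last.lower()}{i + 1}@example.com",
            # 555-01xx numbers are conventionally used as fictional phone numbers
            "phone": "555-01" + "".join(rng.choices(string.digits, k=2)),
            "plan": rng.choice(PLANS),
            "signup_date": (start + timedelta(days=rng.randint(0, 1460))).isoformat(),
            "balance": round(rng.uniform(0, 5000), 2),
        })
    return customers
```

Seeding matters more than it looks: when a test fails, you can regenerate exactly the same data set to reproduce the failure, which is much harder with a masked production snapshot that changes every refresh.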

Defect Creation & Management

Raising defects is often one of the most tedious parts of a tester’s job. AI can be integrated into the defect management process at any level - from raising defects in an application like Jira using a simple chat interface, to a fully automated solution that creates defects and links them to a specific test case based on the nature of the failure.
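One piece of the automated end of that spectrum is turning a test failure into a consistently structured defect. The sketch below builds a request body in the shape of Jira’s REST API v2 create-issue call (`POST /rest/api/2/issue`); sending it, authentication, and formally linking the issue to a test case are left out. The `TestFailure` fields, the keyword rules in `categorize`, and the label scheme are hypothetical stand-ins for what an LLM classifier might do, and you should check the payload against your own Jira version and project configuration.

```python
from dataclasses import dataclass


@dataclass
class TestFailure:
    test_case_id: str  # hypothetical ID format from a test management tool
    test_name: str
    error_message: str
    environment: str
    steps: list[str]


# Hypothetical keyword rules for tagging; an LLM classifier could replace these.
CATEGORY_RULES = {
    "timeout": "performance",
    "connection refused": "environment",
    "assertionerror": "functional",
    "nullpointerexception": "functional",
    "401": "security",
    "403": "security",
}


def categorize(error_message):
    """Return the first matching category, or flag the failure for human triage."""
    text = error_message.lower()
    for keyword, category in CATEGORY_RULES.items():
        if keyword in text:
            return category
    return "needs-triage"


def build_jira_payload(failure, project_key):
    """Build a standardized body for Jira's REST API v2 create-issue endpoint."""
    category = categorize(failure.error_message)
    steps = "\n".join(f"{n}. {step}" for n, step in enumerate(failure.steps, start=1))
    description = (
        f"Test case: {failure.test_case_id} ({failure.test_name})\n"
        f"Environment: {failure.environment}\n\n"
        f"Steps to reproduce:\n{steps}\n\n"
        f"Actual result:\n{failure.error_message}"
    )
    return {
        "fields": {
            "project": {"key": project_key},
            "issuetype": {"name": "Bug"},
            "summary": f"[{category}] {failure.test_name} failed in {failure.environment}",
            "description": description,
            "labels": ["auto-raised", category, failure.test_case_id],
        }
    }
```

Even without any AI, a fixed template like this addresses the “lack of standardization in defect content” problem: every defect arrives with the same sections, and anything the rules can’t classify is explicitly marked for triage rather than silently mislabeled.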

Root Cause Analysis

Many companies track production incidents, but few take the time to analyze the failures and link them to the related defects or test scripts that “should have” caught them. Part of the problem is that incident data is often unstructured and difficult to understand. LLMs can help teams make sense of these confusing data sets and suggest how to improve test scripts and practices, so that similar issues are more likely to be caught as defects before go-live.
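The linking step can be approximated even before bringing in an LLM. The sketch below swaps in a much simpler technique, word-overlap (Jaccard) similarity, to suggest which existing test scripts relate to a free-text incident description. The script catalogue, stopword list, and 0.15 threshold are hypothetical assumptions; an LLM or embedding model would handle synonyms and paraphrasing that plain word overlap misses.

```python
import re

# Hypothetical test-script catalogue: ID -> short description of what it checks.
TEST_SCRIPTS = {
    "TS-101": "checkout applies gift card balance to order total",
    "TS-102": "login locks account after five failed password attempts",
    "TS-103": "order confirmation email is sent after successful payment",
}

STOPWORDS = {"the", "a", "an", "to", "is", "after", "of", "on", "in", "at",
             "and", "was", "not", "for", "when", "with"}


def tokens(text):
    """Lowercase word set with common stopwords removed."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {w for w in words if w not in STOPWORDS}


def jaccard(a, b):
    """Share of words two sets have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest_related_tests(incident, scripts, threshold=0.15):
    """Return (script_id, score) pairs at or above the threshold, best first."""
    incident_words = tokens(incident)
    scored = [(sid, jaccard(incident_words, tokens(desc))) for sid, desc in scripts.items()]
    matches = [(sid, round(score, 2)) for sid, score in scored if score >= threshold]
    return sorted(matches, key=lambda m: -m[1])
```

An incident such as “Customers report gift card balance not applied at checkout; order total charged in full” points back to `TS-101`, prompting the question of why that script didn’t catch the problem. An empty result is also informative: it suggests the incident falls outside any existing test, i.e., a coverage gap.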

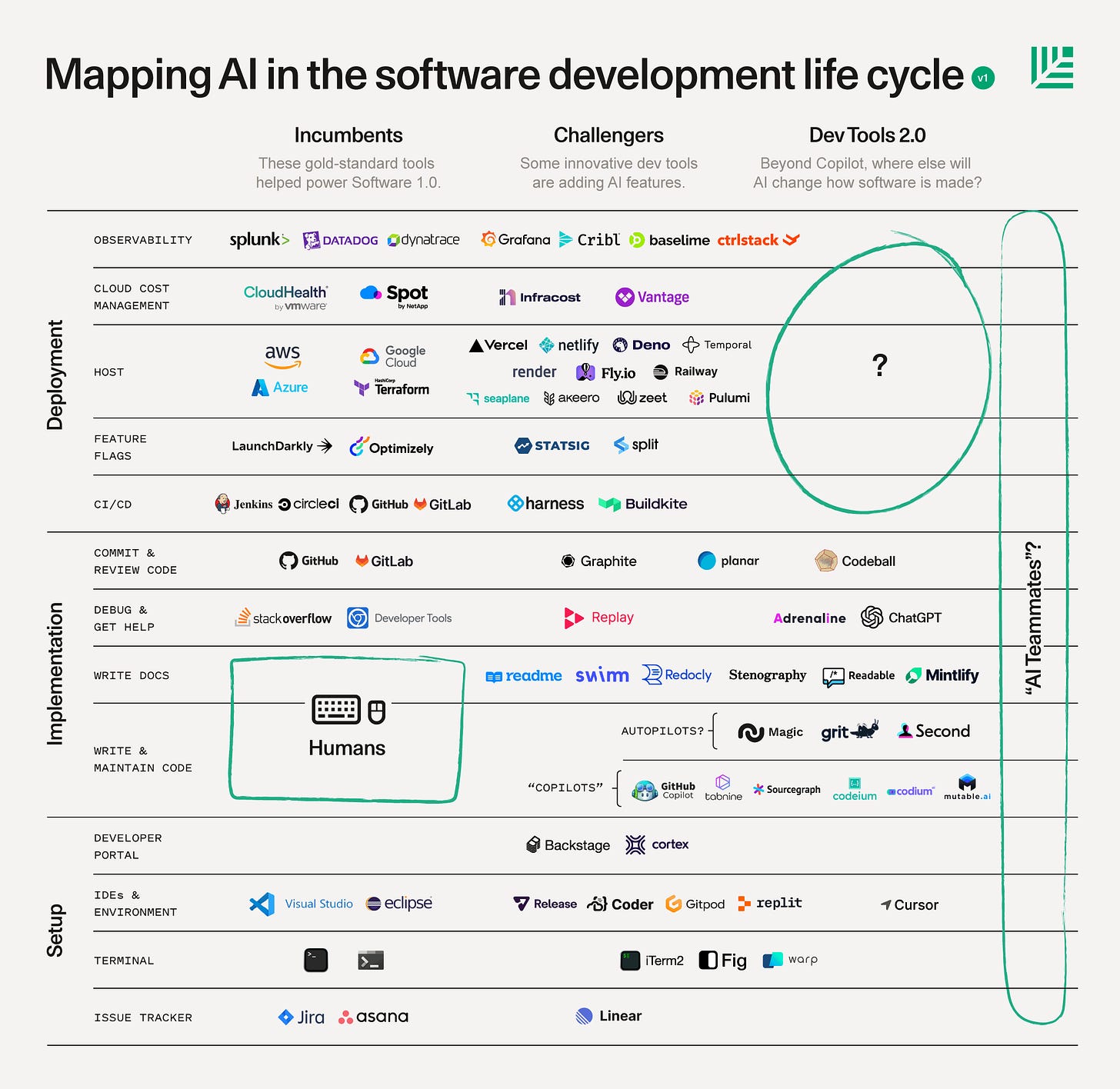

You might be asking: where to begin? There are many startups and established software vendors in the testing market who have integrated AI capabilities into their products. The below visual from Sequoia Capital provides a good snapshot of who’s doing what. As you can see, there is still a lot of room for innovation, and I’m excited to see what features will emerge in the coming months and years.

For all those testers out there who are tired of dealing with the same issues day in and day out, I hope this post has given you some ideas as to how today’s widely available AI technology can make your job just a little bit easier. And if you’d like to discuss AI in testing (or just vent about your developers or BAs), don’t hesitate to reach out to me. As much as I claim to have left the QE life behind, I still like to talk shop now and again.

Until next week,

Nate